A brief introduction to Elixir

Building distributed and concurrent computer systems has never been easy, and it still isn't. But using a programming language and tools designed to tackle that kind of problem makes it a lot easier to deal with.

Elixir provides us with a well-proven, battle-tested systems programming toolkit that allows us to build fault-tolerant, performant and scalable soft real-time systems. This is the first post in a series of Elixir and Erlang related materials, and it provides some context for topics to be discussed later.

So, what exactly is Elixir?

Elixir is a relatively new language as programming languages go. It was created by José Valim as a research and development project at a company called Plataformatec, and was first released in 2012.

Elixir is a functional programming language that runs on the BEAM virtual machine. It is built on top of Erlang and shares the same abstractions for building distributed and concurrent applications as Erlang does.

BEAM Virtual Machine and the OTP

BEAM is the virtual machine running at the core of the Erlang Open Telecom Platform (OTP), and is part of the Erlang Run-Time System (ERTS). BEAM stands for "Bogdan/Björn's Erlang Abstract Machine", named after Bogumil "Bogdan" Hausman, who wrote the original version. The current version is written and maintained by Björn Gustavsson.

Both Erlang and Elixir (as well as some other languages) compile to BEAM bytecode. Once compiled, the resulting .beam files are loaded into the BEAM runtime, ready to run.
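
To make the compile-and-load step a bit more concrete, here is a minimal sketch that compiles a tiny module in memory and inspects the resulting bytecode. The module name Sketch.Greeter is made up for this example; Code.compile_string/1 is part of Elixir's standard library.

```elixir
# Source for a tiny module. Sketch.Greeter is a hypothetical name used only here.
source = """
defmodule Sketch.Greeter do
  def hello(name), do: "Hello, " <> name
end
"""

# Code.compile_string/1 returns a list of {module, bytecode} tuples.
[{module, bytecode}] = Code.compile_string(source)

# The bytecode binary is what would normally be written to a .beam file.
IO.inspect(module, label: "module")
IO.inspect(byte_size(bytecode), label: "bytecode size in bytes")
IO.inspect(binary_part(bytecode, 0, 4), label: "file header")

# The module is now loaded into the running BEAM and can be called right away.
IO.puts(module.hello("BEAM"))
```

Running this as a script (for example with `elixir sketch.exs`) should show that the bytecode is an ordinary binary that the runtime has already loaded.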

OTP is a framework, or set of libraries, for building concurrent, fault-tolerant and distributed applications running on the BEAM. Contrary to what the name suggests, neither Erlang nor OTP is specific to telecom applications, even if that was the target audience back in the day.

Key components of the OTP framework today include tools, modules and behaviours for a wide range of common tasks such as:

- Operating system monitor tools

- Event and alarm handlers

- Various cryptographic libraries and protocols

- Compiler and debugging tools for Erlang

- Management tools for Erlang processes

- Runtime tools
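
As an illustration of what an OTP behaviour looks like from Elixir, the following is a minimal sketch of a counter built on the GenServer behaviour. The module Sketch.Counter and its function names are invented for this example; only the GenServer callbacks and functions come from the standard library.

```elixir
defmodule Sketch.Counter do
  # Hypothetical example module: a counter kept in a separate process.
  use GenServer

  # Client API, called from any process.
  def start_link(initial \\ 0), do: GenServer.start_link(__MODULE__, initial)
  def increment(pid), do: GenServer.cast(pid, :increment)
  def value(pid), do: GenServer.call(pid, :value)

  # Server callbacks, executed inside the counter process.
  @impl true
  def init(initial), do: {:ok, initial}

  @impl true
  def handle_cast(:increment, count), do: {:noreply, count + 1}

  @impl true
  def handle_call(:value, _from, count), do: {:reply, count, count}
end

{:ok, pid} = Sketch.Counter.start_link()
Sketch.Counter.increment(pid)
Sketch.Counter.increment(pid)
IO.inspect(Sketch.Counter.value(pid), label: "counter value")
```

The behaviour takes care of the message loop, so the module only has to describe how the state changes for each message.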

Scheduling

An important task for a runtime environment is to provide resources to the tasks running within the system. You only have a certain amount of CPU, memory and IO available on a computer, and someone has to decide which task gets to do its thing at any given time. Scheduling is a key component of multitasking systems like operating systems and virtual machines, and is usually divided into two categories:

Preemptive scheduling does context switching on running tasks and has the ability to interrupt tasks and resume them at a later time (without the task itself having any control over it). This can be achieved using different strategies such as priority, time slices, number of operations and so on.

Cooperative scheduling requires the tasks to cooperate for context switching. The scheduler will let the task run until it yields control or is idle. Then it will start a new task and wait for it to return control back to the caller's thread.

Real-time systems (such as Erlang/Elixir and others) cannot rely on cooperative scheduling, because there is no guarantee that tasks will respond or yield control within the required time frame. Preemptive scheduling is therefore the better choice for real-time systems.

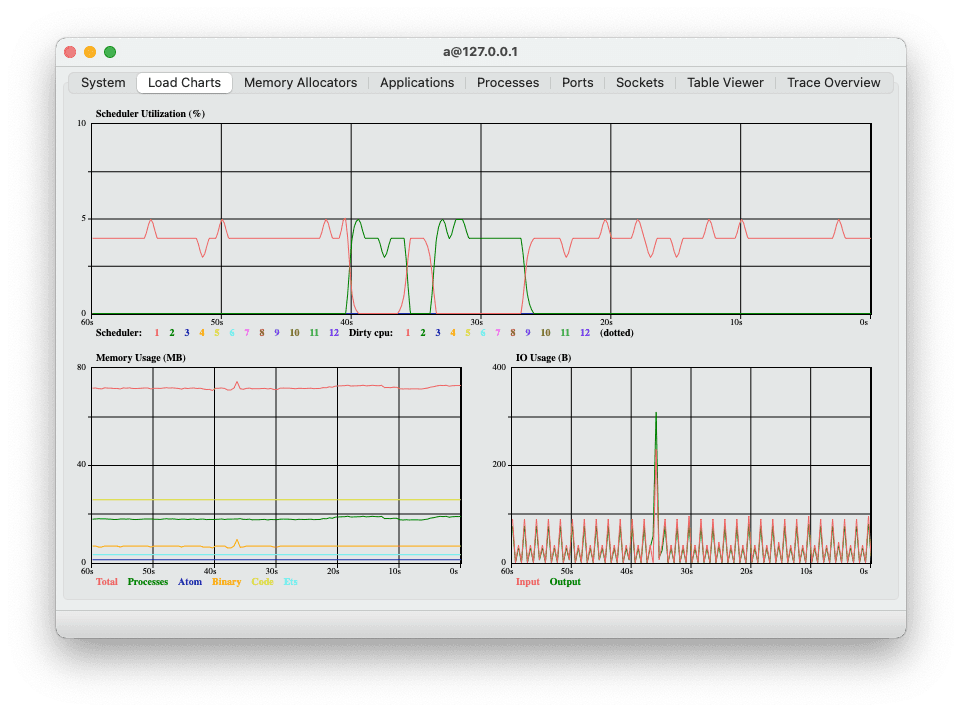

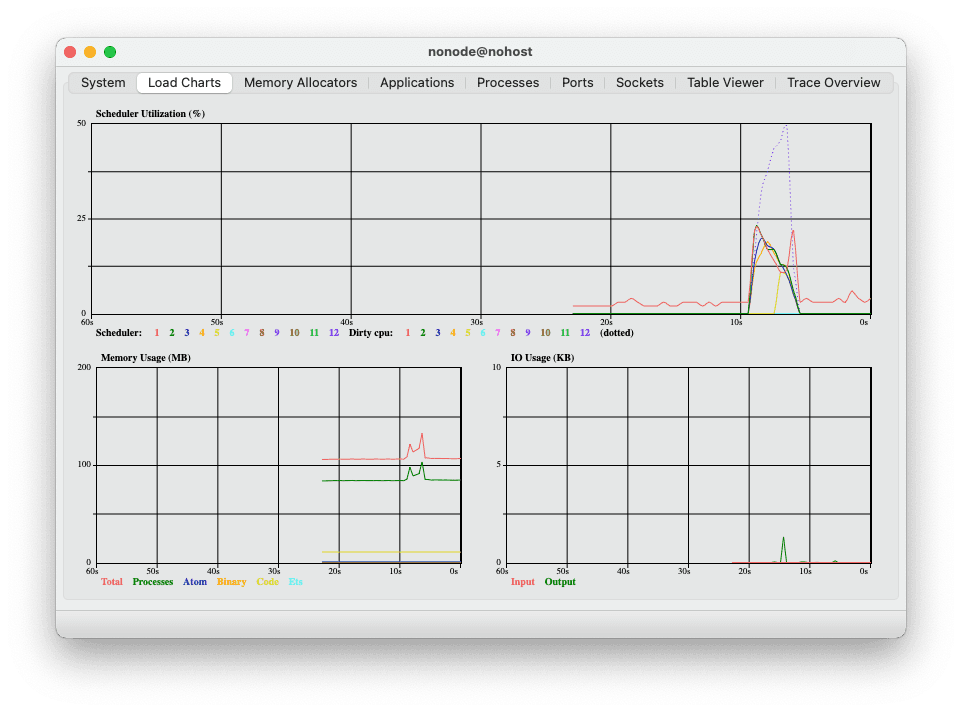

BEAM Observer displays scheduler threads utilisation

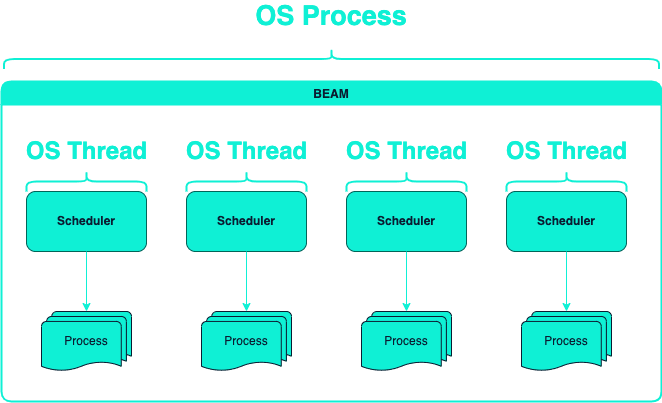

A BEAM instance runs in a single OS process. For each CPU core in the computer it spawns a thread running a separate scheduler, each with its own queues and resource management.
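
The number of scheduler threads can be checked from a running system. A small sketch using functions from the System module and Erlang's :erlang.system_info/1 (the variable names are just for illustration):

```elixir
# Scheduler threads the runtime was started with.
total = System.schedulers()

# Scheduler threads currently allowed to run processes.
online = System.schedulers_online()

# Logical processors detected by the runtime (may be :unknown on some systems).
processors = :erlang.system_info(:logical_processors)

IO.inspect(total, label: "scheduler threads")
IO.inspect(online, label: "scheduler threads online")
IO.inspect(processors, label: "logical processors")
```

On a typical machine the first two numbers match the number of logical cores, unless the runtime was started with flags that limit them.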

BEAM Scheduler threads

The scheduler is responsible for choosing which process to execute. Basically, the scheduler keeps two queues: a "ready" queue for processes that are ready to run and a "waiting" queue for processes waiting to receive a message. Once a waiting process receives a message telling it to perform some kind of task, it is placed in the ready queue. Next, the scheduler picks the first process from the ready queue and hands it over to the BEAM for execution with a "time slice" attached. The time slice is a kind of currency: the process is allowed to execute until its time slice is used up (the BEAM counts this budget in "reductions", roughly function calls, rather than in wall-clock time). If the process blocks, or uses up its time slice, it is put on hold so other processes can do their thing. Once it is scheduled again, it continues where it left off.
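
A rough way to see preemption in action is to saturate every scheduler with processes that never yield voluntarily and check that a new process still gets its turn. This is only a sketch: Sketch.Spinner is a hypothetical module, and the measured time will vary between machines. The BEAM tracks the work a process has done as "reductions", which can be read with Process.info/2.

```elixir
defmodule Sketch.Spinner do
  # Hypothetical busy loop that never blocks or yields on its own.
  def spin, do: spin()
end

# Start twice as many spinners as there are schedulers online,
# so every scheduler always has a busy process in its ready queue.
spinners =
  for _ <- 1..(System.schedulers_online() * 2) do
    spawn(&Sketch.Spinner.spin/0)
  end

# A freshly spawned task still gets scheduled and replies.
{micros, reply} =
  :timer.tc(fn ->
    Task.async(fn -> :pong end) |> Task.await()
  end)

IO.inspect(reply, label: "reply")
IO.inspect(micros, label: "microseconds until reply")

# Reductions counted so far for one of the spinners.
{:reductions, count} = Process.info(hd(spinners), :reductions)
IO.inspect(count, label: "reductions used by one spinner")

# Clean up the busy processes.
Enum.each(spinners, &Process.exit(&1, :kill))
```

With a cooperative scheduler the spinners would hold on to their threads forever and the task would never get a chance to answer.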

Processes

If you ever thought threaded programming was hard, you are not alone. Then you might be happy to hear that Elixir doesn't have threads. So there you go!

Instead of threads, Elixir (or rather Erlang) uses a concept called processes. They are in effect an implementation of the Actor model, which is a "mathematical model of concurrent computation" according to Wikipedia.

The gist is that an Elixir process has its own PID (Process Identifier), its own memory space (a mailbox for communication, a heap and a stack), and a PCB (Process Control Block) which contains information about the process. Processes communicate amongst themselves using message passing and signals, and are very lightweight. Unlike most operating system processes, a newly spawned Elixir process typically uses just a few kilobytes of memory.
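
The basic building blocks can be sketched with nothing more than spawn/1, send/2 and receive. The message shapes {:greet, from, name} and {:greeting, text} are made up for this example, and the memory figure it prints will differ between systems and runtime versions.

```elixir
parent = self()

# Spawn a process that waits for a single message and then replies.
pid =
  spawn(fn ->
    receive do
      {:greet, from, name} -> send(from, {:greeting, "Hello, " <> name})
    end
  end)

# The process is idle, waiting for a message, so we can inspect its footprint.
{:memory, bytes} = Process.info(pid, :memory)
IO.inspect(pid, label: "pid")
IO.inspect(bytes, label: "memory in bytes")

# Put a message in its mailbox and wait for the answer in our own mailbox.
send(pid, {:greet, parent, "BEAM"})

reply =
  receive do
    {:greeting, text} -> text
  after
    1_000 -> :timeout
  end

IO.inspect(reply, label: "reply")
```

No memory is shared between the two processes; the only way they interact is through the messages they send each other.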

    iex(1)> task = Task.async(fn -> 2 * 2 end) # Spawns a new process passing a function to be executed
    %Task{
      owner: #PID<0.105.0>,
      pid: #PID<0.107.0>,
      ref: #Reference<0.3726705870.2747072513.107835>
    }
    iex(2)> Task.await(task, 5000) # Receive computed result with a timeout of 5000ms
    4
    iex(3)> Process.alive?(task.pid) # Check if the process is still alive
    false

Let's take a quick look at the code above.

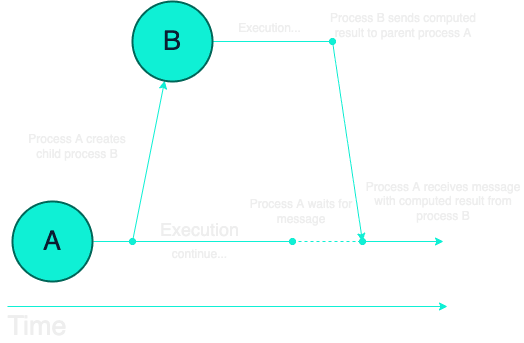

We use Task.async/1, passing a function which is to be executed concurrently in a new process. Now we can continue computation in the parent process until we are ready to receive the result from the spawned process. The spawned process exits once its function has returned and the result has been sent back as a message; Task.await/2 picks that message up from the parent's mailbox.
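
To show the parent doing useful work between the two calls, here is a small sketch. The computations are arbitrary placeholders chosen for this example.

```elixir
# Start a (pretend) expensive computation in a separate process.
task = Task.async(fn -> Enum.sum(1..1_000_000) end)

# Meanwhile, the parent process keeps working on something else.
doubled = Enum.map(1..3, &(&1 * 2))

# Block only when the result is actually needed, for at most 5000 ms.
total = Task.await(task, 5_000)

IO.inspect(doubled, label: "computed by the parent")
IO.inspect(total, label: "computed by the task")
```

If the task does not reply within the timeout, Task.await/2 exits the calling process, so the timeout should be chosen with the expected workload in mind.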

Visualisation of a process fork

All code execution in Elixir is done within a process, and a BEAM node can have many (really many) processes running at the same time. Since Elixir processes are not OS threads (so the OS is not involved in their memory management or in expensive context switches between them), the Actor model gives us huge benefits for high-performance parallel computing.

For fun, we can calculate some numbers in parallel. Let's take the number range 1 to 100 000, multiply each value by itself, then calculate the sum.

    iex(1)> f = fn ->
    ...(1)>   1..100_000
    ...(1)>   |> Enum.map(fn n -> Task.async(fn -> n * n end) end)
    ...(1)>   |> Enum.map(&Task.await/1)
    ...(1)>   |> Enum.sum()
    ...(1)> end
    iex(2)> :timer.tc(f)
    {3109072, 333338333350000}

Each multiplication in the range executes in its own process; the results are then awaited and summed. On my computer the entire run takes 3109072 µs, which translates to roughly 3.1 seconds, and the sum is 333338333350000.

Load chart for BEAM Scheduler while calculating 100 000 numbers

I think that's pretty neat. Imagine having to spawn 100 000 threads that share memory and have to be managed by your OS. Not ideal :)
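
Spawning all 100 000 processes at once is fine for a demonstration, but the number of tasks in flight can also be capped. A possible variation, sketched here with Task.async_stream/3 from the standard library, limits concurrency to the number of schedulers online; that limit is my own choice for this example, not something the calculation requires.

```elixir
sum =
  1..100_000
  # Still one process per element, but only max_concurrency of them run at a time.
  |> Task.async_stream(fn n -> n * n end, max_concurrency: System.schedulers_online())
  # Each successful result arrives as {:ok, value}, in the original order.
  |> Enum.reduce(0, fn {:ok, square}, acc -> acc + square end)

IO.inspect(sum, label: "sum of squares")
```

Timings will differ from the version above, since far fewer processes are alive at any given moment.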

Summary

I hope this article served its purpose, that you now have a superficial idea of the inner workings of Elixir, the BEAM runtime and processes.

By utilizing the mechanisms discussed, we have the tools to build highly concurrent, distributed computer systems. Elixir processes and the Actor model, combined with a preemptive concurrent runtime, allow us to spread the load over all CPU cores in our machines and leave the system responsive even under heavy load.

In the next article we shall take a look at how to implement a concurrent network pinger using some common OTP concepts.

If you have any comments or questions, please reach out to me at rolf.havard.blindheim@intility.no.

if (wantUpdates == true) {followIntilityOnLinkedIn();}