If you haven't read part 1 of this article series, I recommend doing so before continuing here.

In the previous article, we wrote a skeleton for the public API functions in the application context module and set up the root supervision tree. In this part, we'll focus on the "Subnet Manager" GenServer. It will be responsible for launching ping request tasks for hosts in a subnet and for keeping track of which hosts are up or down.

Let's crack out some code!

Create a new file <highlight-mono>lib/ping_machine/subnet_manager.ex<highlight-mono> and open it up in your favorite text editor. Write or paste the following code.

Let's go through what we just did here.

On the very first line, we define a module called <highlight-mono>PingMachine.SubnetManager<highlight-mono>.

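Next, we <highlight-mono>use GenServer<highlight-mono>. To use macros from another module we have to <highlight-mono>require<highlight-mono> it, and the <highlight-mono>use<highlight-mono> macro goes one step further by injecting code from another module into ours.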

This means that when we <highlight-mono>use GenServer<highlight-mono>, our module declares the GenServer behaviour and receives default implementations of <highlight-mono>child_spec/1<highlight-mono> and several GenServer callbacks, all of which we can override. Good! The GenServer behaviour defines a set of callback functions that we can implement to make it useful for us. We'll use quite a few of them, but for now we'll focus on <highlight-mono>start_link/1<highlight-mono> and <highlight-mono>init/1<highlight-mono>.

Strictly speaking, <highlight-mono>start_link/1<highlight-mono> is not a GenServer callback function, but the DynamicSupervisor calls it whenever we start a new SubnetManager. The only thing this function does is start the <highlight-mono>PingMachine.SubnetManager<highlight-mono> GenServer by calling <highlight-mono>GenServer.start_link/3<highlight-mono>. That function takes the GenServer module to start as its first argument, an init argument as its second, and a list of options as its third. By supplying __MODULE__, we pass the current module, aka <highlight-mono>PingMachine.SubnetManager<highlight-mono>, as the first argument. Next, we pass subnet, which is the argument our function received. Finally, we pass a name built by the <highlight-mono>via_tuple/1<highlight-mono> function defined at the bottom of the file.

<info>We use via-tuples to register processes in the process storage Registry. Once the Registry is started, we can register or look up processes using a {:via, Registry, {registry, key}} tuple. As a convenience, we have defined a function to generate this tuple for us.<info>
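To show the via-tuple mechanism on its own, here is a minimal, self-contained sketch that can be run as a plain Elixir script. The names <highlight-mono>DemoRegistry<highlight-mono> and <highlight-mono>DemoNamedServer<highlight-mono> are hypothetical. The registry is also started by hand here, whereas the tutorial starts it in the supervision tree.

```elixir
# A registry with unique keys. In the tutorial this is started by the application.
{:ok, _} = Registry.start_link(keys: :unique, name: DemoRegistry)

defmodule DemoNamedServer do
  use GenServer

  def start_link(key), do: GenServer.start_link(__MODULE__, key, name: via_tuple(key))

  # Build the {:via, Registry, {registry, key}} tuple used to register and to look up.
  def via_tuple(key), do: {:via, Registry, {DemoRegistry, key}}

  @impl true
  def init(key), do: {:ok, key}

  @impl true
  def handle_call(:whoami, _from, key), do: {:reply, key, key}
end

{:ok, pid} = DemoNamedServer.start_link("10.0.0.0/30")

# The process can now be found by key instead of by pid.
[{^pid, _value}] = Registry.lookup(DemoRegistry, "10.0.0.0/30")
"10.0.0.0/30" = GenServer.call(DemoNamedServer.via_tuple("10.0.0.0/30"), :whoami)

# Starting a second process with the same key is refused.
{:error, {:already_started, ^pid}} = DemoNamedServer.start_link("10.0.0.0/30")
```

The last line shows why registering by subnet is handy. A second process for the same key is refused with an <highlight-mono>{:error, {:already_started, pid}}<highlight-mono> tuple instead of silently starting a duplicate.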

Finally, we use the <highlight-mono>IP.Subnet.is_subnet/1<highlight-mono> guard to ensure that the value we pass on as the init argument actually is a valid subnet. Guards are evaluated at runtime every time the function is called. If the argument doesn't pass the guard, no clause matches and the call raises a FunctionClauseError. This means we can be certain that the body of this function will never run unless the given argument passes the guard!
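<highlight-mono>IP.Subnet.is_subnet/1<highlight-mono> comes from a third-party library, so this sketch uses a hypothetical stand-in guard called <highlight-mono>is_prefix_length/1<highlight-mono>, defined with <highlight-mono>defguard<highlight-mono>, to show how guards behave at runtime. An argument that passes runs the clause. One that fails raises <highlight-mono>FunctionClauseError<highlight-mono>. The sketch assumes Elixir 1.12 or later for <highlight-mono>Integer.pow/2<highlight-mono>.

```elixir
defmodule DemoGuards do
  # Hypothetical guard standing in for IP.Subnet.is_subnet/1:
  # it accepts an IPv4 prefix length between 0 and 32.
  defguard is_prefix_length(len) when is_integer(len) and len >= 0 and len <= 32
end

defmodule DemoPrefix do
  import DemoGuards

  # The guard is checked on every call, at runtime, before the body runs.
  def host_count(len) when is_prefix_length(len), do: Integer.pow(2, 32 - len)
end

256 = DemoPrefix.host_count(24)

# An argument that fails the guard matches no clause, so the call raises.
result =
  try do
    DemoPrefix.host_count(33)
  rescue
    FunctionClauseError -> :rejected
  end

:rejected = result
```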

The <highlight-mono>init/1<highlight-mono> callback is invoked when the GenServer is started. It receives the init argument given to the <highlight-mono>GenServer.start_link/3<highlight-mono> function. The return value of this function determines how the GenServer behaves, but the most common choice is to return an <highlight-mono>{:ok, state}<highlight-mono> tuple, which makes the GenServer enter its event loop.

Using <highlight-mono>Process.send/3<highlight-mono>, we send ourselves a message saying that we should start pinging hosts in the subnet right away. We'll see how to respond to that message in the next section, so we'll leave it at that for now.

Then we return the <highlight-mono>{:ok, state}<highlight-mono> tuple containing the initial state for the GenServer. We use a map to store the state, and it holds two values: the subnet we're pinging, and a list of the ping tasks we spawn, each with its host and status.

In the previous article we briefly talked about what GenServers are, namely processes that can keep state. This is true, but not very precise. From the documentation on GenServers:

"A GenServer is a process like any other Elixir process and it can be used to keep state, execute code asynchronously and so on. The advantage of using a generic server process (GenServer) implemented using this module is that it will have a standard set of interface functions and include functionality for tracing and error reporting."

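The goal of GenServer is to abstract away the receive loop for developers, so we never want to use <highlight-mono>receive/1<highlight-mono> inside a GenServer. Instead, GenServer defines callback functions that we implement to respond to messages sent to the process. There are four such callbacks for different kinds of messages: <highlight-mono>handle_call/3<highlight-mono> for synchronous requests, <highlight-mono>handle_cast/2<highlight-mono> for asynchronous requests, <highlight-mono>handle_info/2<highlight-mono> for all other messages, and <highlight-mono>handle_continue/2<highlight-mono> for continue instructions.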

Okay, so now we know how to respond to incoming messages. In our <highlight-mono>init/1<highlight-mono> function, we invoked <highlight-mono>Process.send(self(), :start_ping, [])<highlight-mono> to send ourselves a message saying that we want to start pinging hosts in the subnet right away. This is a plain message rather than a call or a cast, so it ends up in the :info "inbox" of our GenServer. We therefore need to implement a <highlight-mono>handle_info/2<highlight-mono> callback function to capture it.
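Here is a minimal sketch of the self-message pattern. The <highlight-mono>DemoStarter<highlight-mono> module is hypothetical, and its state keys (<highlight-mono>hosts<highlight-mono> and <highlight-mono>started<highlight-mono>) are for illustration only. They are not the tutorial's state.

```elixir
defmodule DemoStarter do
  use GenServer

  def start_link(hosts), do: GenServer.start_link(__MODULE__, hosts)

  @impl true
  def init(hosts) do
    # Queue a message to ourselves. It is handled only after init/1 has returned
    # and the GenServer has entered its loop, so start_link/1 isn't held up.
    Process.send(self(), :start_ping, [])
    {:ok, %{hosts: hosts, started: false}}
  end

  # Plain messages (neither calls nor casts) are delivered to handle_info/2.
  @impl true
  def handle_info(:start_ping, state) do
    {:noreply, %{state | started: true}}
  end
end

{:ok, pid} = DemoStarter.start_link(["10.0.0.1", "10.0.0.2"])
# :start_ping is already in the mailbox ahead of this request, so the flag is set.
%{started: true} = :sys.get_state(pid)
```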

Let's see how we can blend in another ingredient to the potion.

Just below the <highlight-mono>init/1<highlight-mono> function in the <highlight-mono>lib/ping_machine/subnet_manager.ex<highlight-mono> file, add the following two functions:

<info>We don't actually send any ping requests in this project. That would be easy to implement, but it's beside the point of the tutorial. We simply sleep for between 100 and 1000 ms to simulate network delay, then randomly decide whether the request succeeded, with a success rate of roughly 2/3.<info>

<info>All four callback functions of the GenServer receive the GenServer's own state as their last argument, which lets us inspect and access the current state. All four callbacks are also expected to return a state, either the same one or a new one.<info>

We'll start with <highlight-mono>handle_info/2<highlight-mono>, because that's where the message sent in <highlight-mono>init/1<highlight-mono> ends up. The function signature shows that it takes two arguments. The first is the atom :start_ping, and the second is the GenServer state.

Let's use this callback to fire off a new ping job for every IP address in the subnet range. Since we were clever enough to store the subnet in the state, it's easy to access here. As we already know, the subnet value stored in the state is a valid subnet. On top of that, it is a pretty clever subnet: an <highlight-mono>IP.Subnet<highlight-mono> struct, which implements the Enumerable protocol.

<info>In object-oriented programming languages we often use inheritance to achieve polymorphism. Elixir uses a concept called protocols to achieve polymorphism when we want behaviour to vary depending on the type of data we're working with.<info>
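As a rough illustration of how a struct like <highlight-mono>IP.Subnet<highlight-mono> can become enumerable, here is a sketch of a hypothetical <highlight-mono>DemoHostRange<highlight-mono> struct. It implements the <highlight-mono>Enumerable<highlight-mono> protocol by delegating to a stream of host strings. This is not how the IP library implements it. It only shows the protocol mechanics.

```elixir
defmodule DemoHostRange do
  # Hypothetical stand-in for IP.Subnet: the hosts "prefix.first" through "prefix.last".
  defstruct [:prefix, :first, :last]
end

defimpl Enumerable, for: DemoHostRange do
  def count(%DemoHostRange{first: first, last: last}), do: {:ok, last - first + 1}

  # Returning {:error, __MODULE__} tells Enum to fall back to reduce/3.
  def member?(_range, _value), do: {:error, __MODULE__}
  def slice(_range), do: {:error, __MODULE__}

  # Enumerate by lazily building each host address from the octet range.
  def reduce(%DemoHostRange{prefix: prefix, first: first, last: last}, acc, fun) do
    first..last
    |> Stream.map(fn octet -> "#{prefix}.#{octet}" end)
    |> Enumerable.reduce(acc, fun)
  end
end

range = %DemoHostRange{prefix: "10.0.0", first: 1, last: 3}
Enum.map(range, fn host -> "ping " <> host end)
# => ["ping 10.0.0.1", "ping 10.0.0.2", "ping 10.0.0.3"]
```

Because the struct implements the protocol, every function in <highlight-mono>Enum<highlight-mono> works on it without knowing anything about subnets.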

The <highlight-mono>IP.Subnet<highlight-mono> struct produces every IP address in the subnet range when it is enumerated, which is why we can pass it directly to <highlight-mono>Enum.map/2<highlight-mono>. This function takes an enumerable and a function to apply to each of its elements.

This is pretty straightforward. We iterate over all hosts in the subnet range. For each host, we invoke <highlight-mono>GenServer.cast/2<highlight-mono>, passing our own "via-tuple" reference as the first argument and a <highlight-mono>{:task, host}<highlight-mono> tuple as the message. Because we use <highlight-mono>GenServer.cast/2<highlight-mono>, all these messages are sent asynchronously and we don't wait for a reply. Once we're through the list, we return a <highlight-mono>{:noreply, state}<highlight-mono> tuple.
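The fan-out step can be sketched on its own as follows. <highlight-mono>DemoFanOut<highlight-mono> and its <highlight-mono>queued<highlight-mono> list are hypothetical. The sketch also casts to <highlight-mono>self()<highlight-mono> instead of a via-tuple. Inside the GenServer, both refer to the same process.

```elixir
defmodule DemoFanOut do
  use GenServer

  def start_link(hosts), do: GenServer.start_link(__MODULE__, hosts)

  @impl true
  def init(hosts) do
    send(self(), :start_ping)
    {:ok, %{hosts: hosts, queued: []}}
  end

  @impl true
  def handle_info(:start_ping, state) do
    # One asynchronous cast per host. cast/2 returns :ok immediately, with no reply.
    Enum.each(state.hosts, fn host -> GenServer.cast(self(), {:task, host}) end)
    {:noreply, state}
  end

  @impl true
  def handle_cast({:task, host}, state) do
    # Each cast is handled separately, after handle_info/2 has returned.
    {:noreply, %{state | queued: [host | state.queued]}}
  end
end

{:ok, pid} = DemoFanOut.start_link(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
# The first synchronous call returns once :start_ping has been handled, so the
# casts it queued are ahead of the second call in the mailbox.
_ = :sys.get_state(pid)
%{queued: ["10.0.0.3", "10.0.0.2", "10.0.0.1"]} = :sys.get_state(pid)
```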

Since we used <highlight-mono>GenServer.cast/2<highlight-mono> to send the message, we need to implement <highlight-mono>handle_cast/2<highlight-mono> to receive the message.

We define a <highlight-mono>handle_cast/2<highlight-mono> function whose first parameter matches the message we cast from the <highlight-mono>handle_info(:start_ping, state)<highlight-mono> function. As always, the last argument is the GenServer state.

This callback receives one message for each host in the subnet range. Each time it receives a message, we spawn a new Task using the <highlight-mono>Task.Supervisor.async_nolink/2<highlight-mono> function. We pass <highlight-mono>PingMachine.TaskSupervisor<highlight-mono> as the first argument and the function to execute in the task as the second. The return value is a Task struct with information about the process we just spawned, including the reference we'll later use to match its result.

Since the <highlight-mono>handle_cast/2<highlight-mono> callback function is called asynchronously, we don't actually respond with a value. This is indicated by using the :noreply atom in the return value. We update the state by adding the newly created task, along with the host and a :pending status.
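Here is a minimal sketch of the spawning step. It assumes a task supervisor registered as <highlight-mono>DemoTaskSupervisor<highlight-mono>, standing in for <highlight-mono>PingMachine.TaskSupervisor<highlight-mono>. It also assumes a state map with a <highlight-mono>tasks<highlight-mono> list of <highlight-mono>%{task: ..., host: ..., status: ...}<highlight-mono> entries. These field names are illustrative.

```elixir
# Stands in for PingMachine.TaskSupervisor.
{:ok, _} = Task.Supervisor.start_link(name: DemoTaskSupervisor)

defmodule DemoSpawner do
  use GenServer

  def start_link(_), do: GenServer.start_link(__MODULE__, nil)

  @impl true
  def init(_), do: {:ok, %{tasks: []}}

  @impl true
  def handle_cast({:task, host}, state) do
    # The task runs under the supervisor and is monitored by (not linked to) this
    # GenServer. async_nolink returns a %Task{} whose ref tags the task's reply.
    task =
      Task.Supervisor.async_nolink(DemoTaskSupervisor, fn ->
        # Simulated ping job with shortened delays.
        Process.sleep(Enum.random(10..50))
        Enum.random([:ok, :ok, :error])
      end)

    entry = %{task: task, host: host, status: :pending}
    {:noreply, %{state | tasks: [entry | state.tasks]}}
  end

  # This sketch ignores the task replies and :DOWN messages that arrive later.
  @impl true
  def handle_info(_msg, state), do: {:noreply, state}
end

{:ok, pid} = DemoSpawner.start_link(nil)
GenServer.cast(pid, {:task, "10.0.0.1"})
%{tasks: [%{host: "10.0.0.1", status: :pending, task: %Task{}}]} = :sys.get_state(pid)
```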

Remember that Elixir has no return keyword. A function always returns the value of the last expression it evaluates. For our ping job function, that means the return value is either the :ok or the :error atom. Now it's time to implement some callback functions that capture the outcome of the ping jobs, so that we can keep track of successful and failed requests.
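The simulated ping job described in the earlier note could be sketched like this. <highlight-mono>DemoFakePing<highlight-mono> is a hypothetical module name, and no network traffic is involved. Its last expression, the :ok or :error atom, is what the task reports back.

```elixir
defmodule DemoFakePing do
  # Simulated ping: sleep 100 to 1000 ms to mimic latency, then pick an outcome.
  # Two :ok entries out of three give roughly a 2/3 success rate.
  def ping(_host) do
    Process.sleep(Enum.random(100..1000))
    Enum.random([:ok, :ok, :error])
  end
end

DemoFakePing.ping("10.0.0.1")
```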

The potency of our brew is growing stronger! However, there are still a few ingredients missing.

We need a way to figure out which ping jobs succeeded and which failed. Every Task we spawn from the <highlight-mono>PingMachine.SubnetManager<highlight-mono> GenServer sends back a plain message with the outcome of its job, and those messages arrive as :info messages. We therefore need to add some <highlight-mono>handle_info/2<highlight-mono> callback functions that can respond to them. The messages have the format {ref, response}. Here, ref is the Task's monitor reference (the same ref stored in the Task struct), and response is the value that the task's function returned.

Just below the previous <highlight-mono>handle_info/2<highlight-mono> callback function, add the following:

This is the callback function for successful ping requests. As stated above, we pattern match the function signature on the <highlight-mono>{ref, :ok}<highlight-mono> message from the Task. In Elixir, we can use pattern matching on function arguments (combined with function arity) as a control flow mechanism. In our case, successful and failed ping jobs deliver their results to separate function clauses. As a result, we get small, clean functions that each focus on a single job. As always, we also receive the GenServer state so we can update the outcome of the current ping job.

To find the job whose state we need to update, we pass the task list through Enum.find/3 and compare the ref argument with the Task references already stored in the state. Next, we add a log message for good measure, and then we demonitor the process.

In Elixir, there are different ways of binding processes together. One is linking, which is bidirectional. If a linked process exits abnormally, all processes linked to it are doomed as well (unless they trap exits). It doesn't matter which process dies first. It's going to be a massacre, and they all die!

Monitoring on the other hand, is unidirectional. A process can monitor other processes, and be notified of what's going on with them. If they die, the monitoring process might be a bit sad, but not much else.

Tasks started with <highlight-mono>Task.Supervisor.async_nolink/2<highlight-mono> are monitored by the process that started them, which is how we're notified when they finish their job. So once a ping job has reported a successful outcome, we can demonitor it. We no longer care about it and don't need any more messages from it.

Finally, we build a new task list by deleting the current task from the state and adding it back with an updated :success status.

Next up is the callback function for failed ping requests. It's almost identical to the success function, so I won't go into much detail here. Add it below the function we just wrote.

Again, we pattern match on <highlight-mono>{ref, :error}<highlight-mono>, demonitor the process and return a :noreply tuple with the updated state.
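Here is a self-contained sketch that puts the two result callbacks together. It assumes a hypothetical state shape: a <highlight-mono>tasks<highlight-mono> list of <highlight-mono>%{task: ..., host: ..., status: ...}<highlight-mono> maps. It uses :failed as the failure status, matching the status the listing functions filter on later. To keep the outcome predictable, each task receives a fixed result. <highlight-mono>DemoResults<highlight-mono> and <highlight-mono>DemoResultSupervisor<highlight-mono> are hypothetical names.

```elixir
{:ok, _} = Task.Supervisor.start_link(name: DemoResultSupervisor)

defmodule DemoResults do
  use GenServer
  require Logger

  def start_link(jobs), do: GenServer.start_link(__MODULE__, jobs)

  @impl true
  def init(jobs) do
    # jobs: hypothetical {host, outcome} pairs, so each task's result is fixed.
    tasks =
      for {host, outcome} <- jobs do
        task = Task.Supervisor.async_nolink(DemoResultSupervisor, fn -> outcome end)
        %{task: task, host: host, status: :pending}
      end

    {:ok, %{tasks: tasks}}
  end

  # A task replies with {ref, result}. Each outcome gets its own clause.
  @impl true
  def handle_info({ref, :ok}, state), do: {:noreply, finish(ref, :success, state)}
  def handle_info({ref, :error}, state), do: {:noreply, finish(ref, :failed, state)}

  defp finish(ref, status, state) do
    job = Enum.find(state.tasks, fn %{task: task} -> task.ref == ref end)
    Logger.debug("#{job.host}: #{status}")
    # Stop monitoring, and :flush any :DOWN message that has already arrived.
    Process.demonitor(ref, [:flush])
    tasks = List.delete(state.tasks, job)
    %{state | tasks: [%{job | status: status} | tasks]}
  end
end

{:ok, pid} = DemoResults.start_link([{"10.0.0.1", :ok}, {"10.0.0.2", :error}])
# Give the tasks a moment to report back before inspecting the state.
Process.sleep(200)
:sys.get_state(pid).tasks |> Enum.map(&{&1.host, &1.status}) |> Enum.sort()
```

After the short sleep, the last expression should list 10.0.0.1 as :success and 10.0.0.2 as :failed.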

One last piece of the puzzle. Remember that all Tasks are monitored? That means that if a Task itself crashes, we'll receive a <highlight-mono>{:DOWN, ref, :process, pid, reason}<highlight-mono> message. Let's add a callback function to catch those messages just in case.

In this final callback function we have the opportunity to handle crashed processes. We could add a log message, try to reschedule it or some other wizardry. But, since this application only sends ping requests, a missed host once in a while is probably not that big of a deal, so we'll just capture the message and do nothing.

Add this just below the :error callback function.
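To see a :DOWN message arrive, this separate sketch starts a task that crashes on purpose. <highlight-mono>DemoDown<highlight-mono> and <highlight-mono>DemoDownSupervisor<highlight-mono> are hypothetical names. The tutorial's callback ignores the message, but this sketch records the exit reason so it can be inspected. Expect the task supervisor to log the crash.

```elixir
{:ok, _} = Task.Supervisor.start_link(name: DemoDownSupervisor)

defmodule DemoDown do
  use GenServer

  def start_link(_), do: GenServer.start_link(__MODULE__, nil)

  @impl true
  def init(_) do
    # The task crashes. Because of async_nolink, the crash does not take us down.
    task = Task.Supervisor.async_nolink(DemoDownSupervisor, fn -> raise "boom" end)
    {:ok, %{ref: task.ref, down_reason: nil}}
  end

  # The monitor fires with the crash reason. Here we record it instead of ignoring it.
  @impl true
  def handle_info({:DOWN, ref, :process, _pid, reason}, %{ref: ref} = state) do
    {:noreply, %{state | down_reason: reason}}
  end
end

{:ok, pid} = DemoDown.start_link(nil)
# Give the task a moment to crash and the :DOWN message to arrive.
Process.sleep(100)
%{down_reason: {%RuntimeError{message: "boom"}, _stacktrace}} = :sys.get_state(pid)
true = Process.alive?(pid)
```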

The final piece of the <highlight-mono>PingMachine.SubnetManager<highlight-mono> is the ability to list successful and failed hosts for a given ping scan. We'll stick with callback functions, but these two need to return data to the caller. That's why we'll use <highlight-mono>handle_call/3<highlight-mono> callback functions for them.

They are simple enough. To list all hosts whose ping request succeeded, filter the tasks in the GenServer state for those with :success status and return their host attribute. For failed requests, filter by :failed status instead.

Add the following callback functions right after the <highlight-mono>init/1<highlight-mono> function near the top of the file.

The <highlight-mono>handle_call/3<highlight-mono> callback functions take three arguments. The first is a message, which here is simply a :successful_hosts or :failed_hosts atom. The second is a from argument, a tuple that identifies the calling process by its PID and a unique tag. The third is, as always, the GenServer's own state. We don't care who the caller is, so we prefix the from argument with an underscore to indicate that we won't use that variable.

For each respective function, filter out the data we're interested in from the state and return a <highlight-mono>{:reply, response, state}<highlight-mono> tuple.
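Here is a self-contained sketch of the two query callbacks. It is seeded with a hard-coded, hypothetical task list instead of real ping results. <highlight-mono>DemoQueries<highlight-mono> is a hypothetical module name.

```elixir
defmodule DemoQueries do
  use GenServer

  def start_link(tasks), do: GenServer.start_link(__MODULE__, tasks)

  @impl true
  def init(tasks), do: {:ok, %{tasks: tasks}}

  # _from identifies the caller. We don't need it, hence the underscore.
  @impl true
  def handle_call(:successful_hosts, _from, state) do
    {:reply, hosts_with(state, :success), state}
  end

  def handle_call(:failed_hosts, _from, state) do
    {:reply, hosts_with(state, :failed), state}
  end

  # Keep only the tasks with the given status and return their hosts.
  defp hosts_with(state, status) do
    state.tasks
    |> Enum.filter(&(&1.status == status))
    |> Enum.map(& &1.host)
  end
end

tasks = [
  %{host: "10.0.0.1", status: :success},
  %{host: "10.0.0.2", status: :failed},
  %{host: "10.0.0.3", status: :success}
]

{:ok, pid} = DemoQueries.start_link(tasks)
GenServer.call(pid, :successful_hosts)
# => ["10.0.0.1", "10.0.0.3"]
GenServer.call(pid, :failed_hosts)
# => ["10.0.0.2"]
```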

That concludes our ping machine! If you want to take a look at the original file, it's right here!

It's time to refactor the context module so that the "public API" of the application actually does something. Open the <highlight-mono>lib/ping_machine.ex<highlight-mono> file in your text editor and start by refactoring the <highlight-mono>start_ping/1<highlight-mono> function.

We replace the <highlight-mono>case .. do<highlight-mono> statement with a <highlight-mono>with .. do<highlight-mono> statement. A with statement continues down the happy path as long as each clause matches its pattern. If any clause fails to match, execution moves to the else block, where we can pattern match on the value we got and act accordingly.

So as long as parsing the subnet gives us <highlight-mono>{:ok, subnet}<highlight-mono> and <highlight-mono>start_worker/1<highlight-mono> gives us <highlight-mono>{:ok, pid}<highlight-mono>, we log a "Started pinging..." message and return <highlight-mono>{:ok, pid}<highlight-mono>. If either step fails, we log an error message telling the user what went wrong and return an appropriate value.

As for the more mysterious <highlight-mono>start_worker/1<highlight-mono> private function, this is where we start new <highlight-mono>PingMachine.SubnetManager<highlight-mono> processes under the DynamicSupervisor we talked about earlier. We also pass the subnet as the init argument to the GenServer, so it knows which subnet it will be working on. On success, this function returns <highlight-mono>{:ok, pid}<highlight-mono> with the process id of the SubnetManager it just started, which is what we capture in the <highlight-mono>start_ping/1<highlight-mono> function above. We'll use this pid for all further communication with the GenServer.
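The following sketch connects a <highlight-mono>with<highlight-mono> pipeline to <highlight-mono>DynamicSupervisor.start_child/2<highlight-mono>. Every module and registered name with a Demo prefix is hypothetical. In place of the IP library, it uses a hypothetical <highlight-mono>parse_subnet/1<highlight-mono> built on Erlang's <highlight-mono>:inet.parse_ipv4_address/1<highlight-mono>. Treat it as a sketch under those assumptions rather than the tutorial's code.

```elixir
{:ok, _} = Registry.start_link(keys: :unique, name: DemoScanRegistry)
{:ok, _} = DynamicSupervisor.start_link(strategy: :one_for_one, name: DemoScanSupervisor)

defmodule DemoScanner do
  # Minimal stand-in for PingMachine.SubnetManager, registered by subnet.
  use GenServer

  def start_link(subnet),
    do: GenServer.start_link(__MODULE__, subnet, name: {:via, Registry, {DemoScanRegistry, subnet}})

  @impl true
  def init(subnet), do: {:ok, %{subnet: subnet, tasks: []}}
end

defmodule DemoPing do
  require Logger

  def start_ping(subnet_string) do
    with {:ok, subnet} <- parse_subnet(subnet_string),
         {:ok, pid} <- start_worker(subnet) do
      Logger.info("Started pinging #{subnet_string}")
      {:ok, pid}
    else
      # A scanner for this subnet is already registered.
      {:error, {:already_started, _pid}} = error ->
        Logger.warning("Already pinging #{subnet_string}")
        error

      {:error, reason} = error ->
        Logger.error("Could not start pinging #{subnet_string}: #{inspect(reason)}")
        error
    end
  end

  # Hypothetical validator for "a.b.c.d/len". The tutorial uses the IP library instead.
  defp parse_subnet(string) do
    with [addr, prefix] <- String.split(string, "/"),
         {:ok, _ip} <- :inet.parse_ipv4_address(String.to_charlist(addr)),
         {len, ""} when len in 0..32 <- Integer.parse(prefix) do
      {:ok, string}
    else
      _ -> {:error, :invalid_subnet}
    end
  end

  # Start a scanner under the DynamicSupervisor. Returns {:ok, pid} or an error tuple.
  defp start_worker(subnet),
    do: DynamicSupervisor.start_child(DemoScanSupervisor, {DemoScanner, subnet})
end

{:ok, pid} = DemoPing.start_ping("10.0.0.0/24")
{:error, {:already_started, ^pid}} = DemoPing.start_ping("10.0.0.0/24")
{:error, :invalid_subnet} = DemoPing.start_ping("not-a-subnet")
```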

Now, we'll refactor the <highlight-mono>stop_ping/1<highlight-mono> function to take a pid as its argument instead of the subnet string. We can then pass the pid to <highlight-mono>DynamicSupervisor.terminate_child/2<highlight-mono> to stop that child process.
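Here is a minimal sketch of stopping a child by pid. <highlight-mono>DemoStopSupervisor<highlight-mono>, <highlight-mono>DemoIdle<highlight-mono> and <highlight-mono>DemoStop.stop_ping/1<highlight-mono> are hypothetical names.

```elixir
{:ok, _} = DynamicSupervisor.start_link(strategy: :one_for_one, name: DemoStopSupervisor)

defmodule DemoIdle do
  # A do-nothing GenServer standing in for a SubnetManager.
  use GenServer
  def start_link(arg), do: GenServer.start_link(__MODULE__, arg)

  @impl true
  def init(arg), do: {:ok, arg}
end

defmodule DemoStop do
  # Hypothetical stop_ping/1: ask the DynamicSupervisor to terminate the child by pid.
  def stop_ping(pid) when is_pid(pid) do
    DynamicSupervisor.terminate_child(DemoStopSupervisor, pid)
  end
end

{:ok, pid} = DynamicSupervisor.start_child(DemoStopSupervisor, {DemoIdle, :scan})
:ok = DemoStop.stop_ping(pid)
false = Process.alive?(pid)
# A pid that is no longer a child returns an error instead of crashing.
{:error, :not_found} = DemoStop.stop_ping(pid)
```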

The last two functions in our context module list either the successful or the failed hosts.

These functions also take a pid as their only argument and send a message to the <highlight-mono>PingMachine.SubnetManager<highlight-mono> GenServer with that process id. Since we're using <highlight-mono>GenServer.call/3<highlight-mono>, the messages are received by the <highlight-mono>handle_call/3<highlight-mono> callback functions with matching signatures.
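On the client side, the context functions can be thin wrappers around <highlight-mono>GenServer.call/2<highlight-mono>. In this sketch, <highlight-mono>DemoManager<highlight-mono> is a hypothetical stand-in server that just replies with pre-filled lists, which keeps the focus on the call itself. <highlight-mono>DemoContext<highlight-mono> is also a hypothetical name.

```elixir
defmodule DemoManager do
  use GenServer

  def start_link(hosts_by_status), do: GenServer.start_link(__MODULE__, hosts_by_status)

  @impl true
  def init(state), do: {:ok, state}

  @impl true
  def handle_call(:successful_hosts, _from, state), do: {:reply, state.success, state}
  def handle_call(:failed_hosts, _from, state), do: {:reply, state.failed, state}
end

defmodule DemoContext do
  # Hypothetical public API that forwards to the GenServer by pid.
  # GenServer.call/2 blocks until the reply arrives (default timeout: 5000 ms).
  def successful_hosts(pid) when is_pid(pid), do: GenServer.call(pid, :successful_hosts)
  def failed_hosts(pid) when is_pid(pid), do: GenServer.call(pid, :failed_hosts)
end

{:ok, pid} = DemoManager.start_link(%{success: ["10.0.0.1"], failed: ["10.0.0.2"]})
DemoContext.successful_hosts(pid)
# => ["10.0.0.1"]
```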

Here's a link to the final version of the context module.

Let's try to fire off a ping scan. Open a terminal and launch the interactive Elixir shell using the <highlight-mono>iex -S mix<highlight-mono> command:

Start a new ping scan:

Awesome! Let's try to list out some results.

Pretty good. Now, if you try to start another scan on the same subnet, you should receive a warning. We can stop a scan using <highlight-mono>PingMachine.stop_ping/1<highlight-mono>.

So let's run multiple ping scans in parallel!

And get the successful hosts...

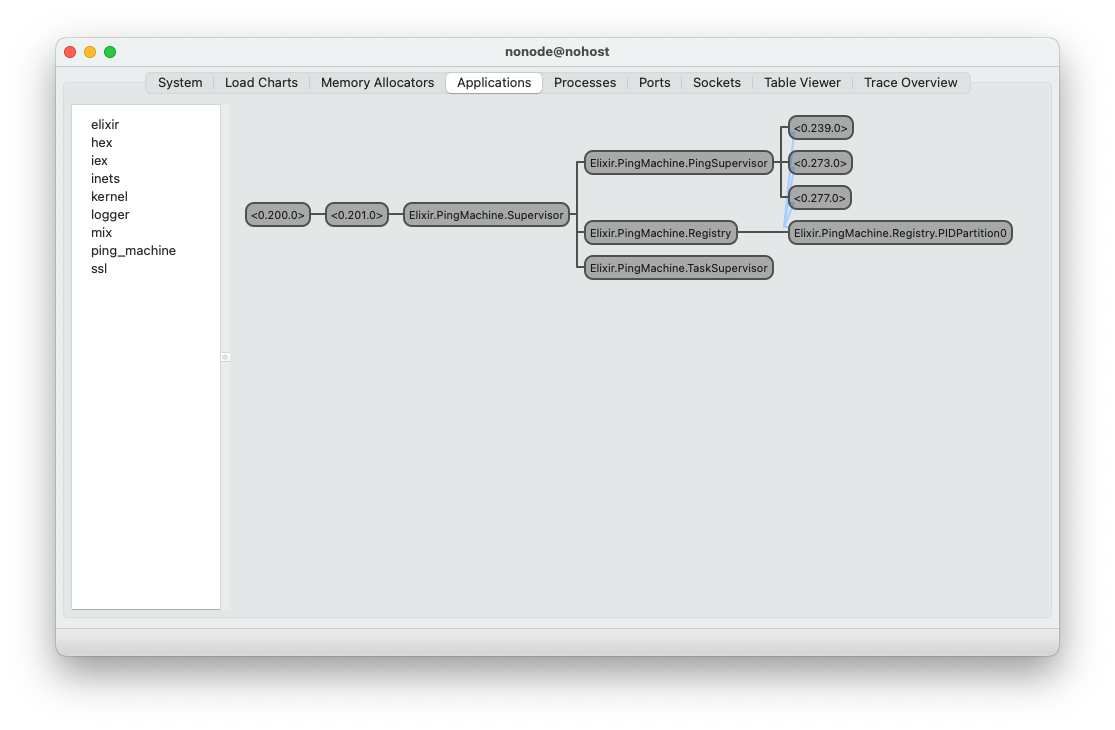

Great! We can inspect our supervision tree in the Erlang Observer by running <highlight-mono>:observer.start()<highlight-mono> and clicking the "Applications" tab.

This concludes the tutorial. We have built a concurrent network pinger from scratch using the Elixir programming language. We have used core OTP behaviours like Supervisors, GenServers and Tasks to run concurrent ping jobs in parallel, and built a simple interface for communicating with them.

If you have any comments or questions, please reach out to me at rolf.havard.blindheim@intility.no.